Elon Musk’s Clarifications on Tesla’s AI Progress and Challenges

In a recent statement, Elon Musk lifted the veil on several key aspects of the development of artificial intelligence (AI) at Tesla. Here is a recap of the highlights:

- Thousands of Nvidia chips originally ordered for Tesla were shipped to 𝕏 and xAI because Tesla had nowhere to install and run them at the time.

- The Giga Texas south expansion, designed specifically for compute-intensive workloads and the cooling they require, is almost complete.

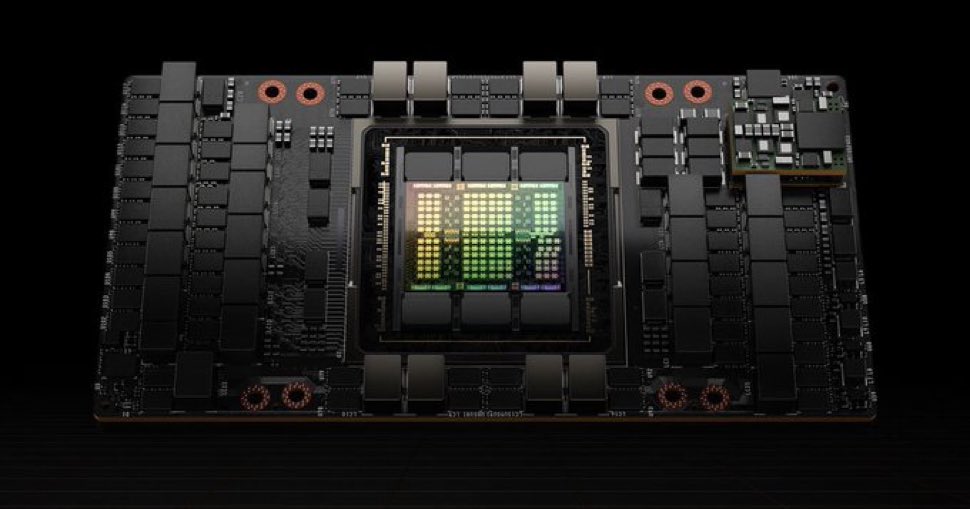

- The expansion will accommodate 50,000 Nvidia H100 GPUs, as well as 20,000 Tesla HW4 AI computers and ample video storage for FSD training.

- Tesla plans to spend about $10 billion this year on AI, including $5 billion on inference hardware and Dojo, and $3 billion to $4 billion in Nvidia purchases.

- Getting Dojo to overtake Nvidia will be a challenge, but it’s possible.

- Tesla’s training compute is relatively small compared to its inference compute, because the latter scales linearly with fleet size.

The Breakdown of Tesla’s AI Spending

One of Musk’s notable announcements concerns Tesla’s planned spending in the field of AI. With a budget of approximately $10 billion, here is how this sum will be allocated:

- About $5 billion will go to internal development, including inference hardware and Dojo, Tesla’s supercomputer.

- Between $3 and $4 billion will be used to purchase Nvidia chips.

These colossal investments demonstrate Tesla’s commitment to remaining at the forefront of technological innovation. “Inference hardware” here refers to the hardware systems necessary to enable previously trained neural networks to make predictions based on new data.
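The allocation above does not add up to the full budget, and a quick calculation makes the gap explicit. The sketch below uses only the figures quoted in this article (in billions of dollars); the function name `unallocated_range` is hypothetical, and the remainder it computes is simple subtraction, not a figure Musk stated.

```python
def unallocated_range(total, internal, nvidia_low, nvidia_high):
    """Return the (low, high) remainder, in billions of dollars,
    left after internal development and Nvidia purchases."""
    # The largest Nvidia purchase leaves the smallest remainder, and vice versa.
    return (total - internal - nvidia_high, total - internal - nvidia_low)


# Figures as quoted in the article: ~$10B total, ~$5B internal, $3B to $4B Nvidia.
low, high = unallocated_range(total=10.0, internal=5.0, nvidia_low=3.0, nvidia_high=4.0)
print(f"Remainder not itemized in the article: ${low:.0f}B to ${high:.0f}B")
```

Under these rounded figures, roughly $1 billion to $2 billion of the budget is not itemized here.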

The Dojo Challenge: Surpass Nvidia

Elon Musk also addressed the monumental challenge that Project Dojo represents. Dojo is the supercomputer developed by Tesla, intended to train deep learning algorithms more efficiently than the powerful Nvidia chips currently in use. Musk acknowledged that it would be difficult to make Dojo superior to Nvidia, but he said it was entirely possible through continued effort and constant innovation.

Increased Inference Computing

Musk also noted that Tesla’s training compute remains relatively small compared to its inference compute. This is because inference, i.e. the application of trained AI models to make decisions in real time, grows linearly with the size of the Tesla vehicle fleet. In other words, the more Tesla vehicles there are on the road, the greater the total need for inference compute, because each vehicle runs AI on board for tasks like autonomous driving (Full Self-Driving, or FSD).
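The linear relationship can be illustrated with a small sketch. Every number below is a hypothetical placeholder in arbitrary units, not Tesla data, and holding the training budget constant is a simplifying assumption made only to show how the ratio shifts as the fleet grows.

```python
def fleet_inference_compute(fleet_size, per_vehicle_compute):
    """Total inference compute if every vehicle runs the same onboard workload."""
    return fleet_size * per_vehicle_compute


TRAINING_COMPUTE = 1_000_000  # hypothetical training budget, arbitrary units
PER_VEHICLE = 1               # hypothetical per-vehicle inference load, same units

for fleet in (500_000, 1_000_000, 5_000_000):
    inference = fleet_inference_compute(fleet, PER_VEHICLE)
    ratio = inference / TRAINING_COMPUTE
    print(f"fleet={fleet:>9,}  inference={inference:>9,}  inference/training={ratio:.1f}")
```

Doubling the fleet doubles the inference total, while the training figure in this toy model does not change with fleet size.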

Conclusion

Elon Musk’s recent clarifications offer valuable insight into Tesla’s ambitious plans in the field of AI. The massive funding commitment, combined with the technical challenges of Dojo and the linear growth of inference compute with fleet size, highlights the strategic importance of AI to Tesla. These developments are worth following closely in the months and years to come, as they could influence not only Tesla but also the wider automotive and technology industries.

In short, the future of AI at Tesla looks both promising and complex, and each advance could reset current market standards.